Auto-scaling Azure SignalR Service with Logic Apps

Azure SignalR Service does not have auto-scale functionality out of the box. In this post I’ll implement my own auto-scaling using Azure Logic Apps.

Scaling Azure SignalR Service

Microsoft promotes Azure SignalR Service as a simple way to scale SignalR implementations, but how does the service itself scale? The answer is Units: each Unit of the Standard_S1 SKU accepts another 1,000 concurrent connections. To scale, increase the Unit count, up to a maximum of 100 (100k concurrent connections), using the Azure Portal, the Azure CLI, Azure PowerShell or the REST API.

There are some caveats. First, the service only accepts Unit counts of 1, 2, 5, 10, 20, 50 and 100; you can’t set a Unit count of, say, 3 or 60. Second, pricing is per Unit, per day, and a partial day counts as a whole day: if you set a Unit count of 100 you’ll be charged 100 x $AUD2.21 for that day, even if you immediately roll back to 1 Unit. Pricing here is not consumption based.
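To make that arithmetic concrete, here’s a small Bash sketch that checks whether a Unit count is one the service accepts and works out its connection capacity and the charge for one day at that count. It assumes the Standard_S1 figures quoted above (1,000 connections per Unit and $AUD2.21 per Unit per day at the time of writing), works in cents because Bash only does integer arithmetic, and the `unit_summary` function name is purely illustrative.

```bash
#!/usr/bin/env bash
# Hypothetical helper: summarise a Standard_S1 Unit count.
# Assumes 1,000 connections per Unit and AUD 2.21 (221 cents) per Unit per day,
# the figures quoted in this post; check current pricing before relying on them.

ALLOWED_UNITS=(1 2 5 10 20 50 100)
CONNECTIONS_PER_UNIT=1000
CENTS_PER_UNIT_PER_DAY=221

unit_summary() {
  local units=$1 allowed
  for allowed in "${ALLOWED_UNITS[@]}"; do
    if [ "$units" -eq "$allowed" ]; then
      local connections=$(( units * CONNECTIONS_PER_UNIT ))
      local cents=$(( units * CENTS_PER_UNIT_PER_DAY ))
      # A partial day is billed as a whole day, so this much is charged
      # even if the Unit count is rolled back straight away.
      printf '%d Unit(s): %d connections, AUD %d.%02d per day\n' \
        "$units" "$connections" $(( cents / 100 )) $(( cents % 100 ))
      return 0
    fi
  done
  echo "$units Unit(s): not an accepted Unit count" >&2
  return 1
}
```

For example, `unit_summary 100` prints `100 Unit(s): 100000 connections, AUD 221.00 per day`, while `unit_summary 3` reports an error and returns a non-zero status.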

Will it auto-scale?

I don’t want to scale it myself in the Portal or by making remote calls; rather, I’d like the service to auto-scale based on concurrent connection count. However, the service does not offer this capability out of the box.

It doesn’t currently autoscale. Is it a blocker for you?

— Anthony Chu (@nthonyChu) July 6, 2019

As such, the driving forces of laziness and curiosity within me have led to the creation of a Logic App that auto-scales SignalR Service for me, even if it’s not a great idea to do so.

Automate Azure with Logic Apps

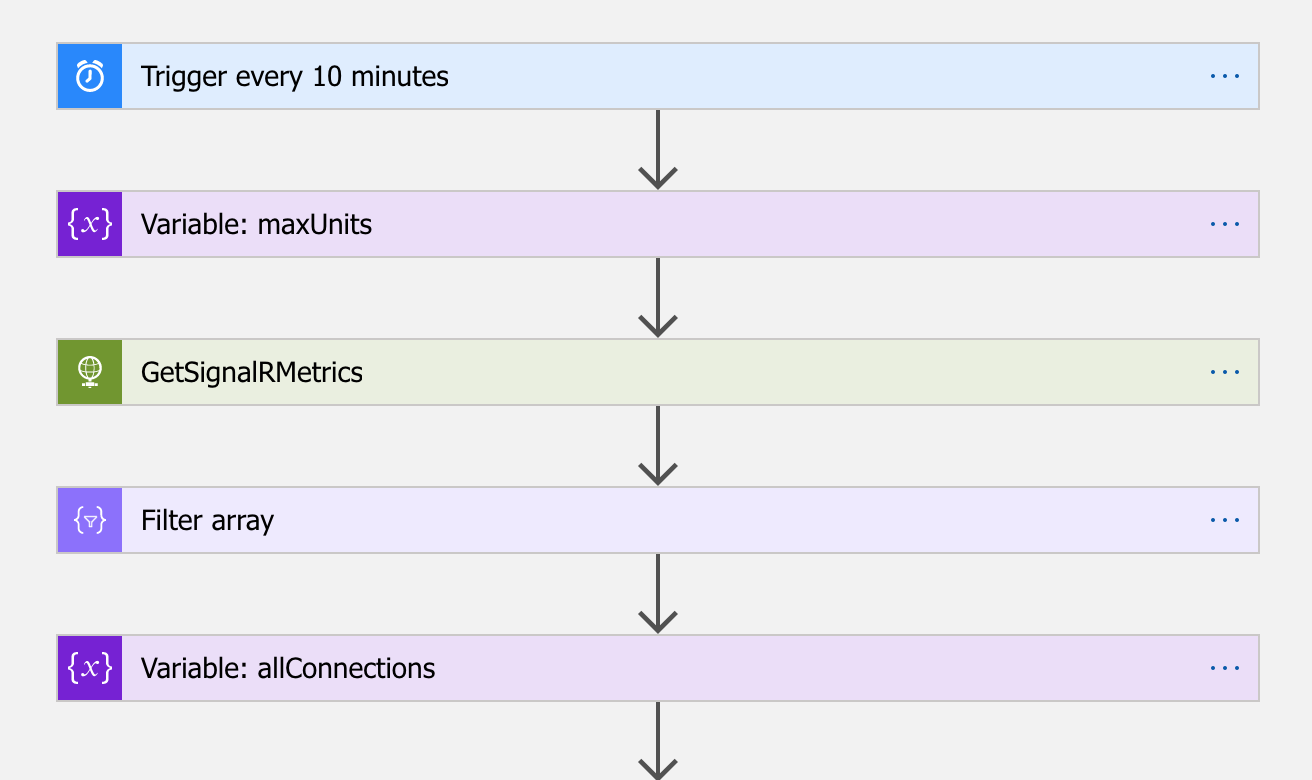

The source and usage instructions are here, but let’s take a look at what this Logic App is doing.

Logic Apps doesn’t have a connector for SignalR Service, so instead we’ll use the Azure REST API to query and modify it. There are three REST endpoints we’re interested in:

- GET monitor/metrics/list to query how many concurrent connections there are

- GET signalr/get to query the current Unit setting

- PUT signalr/createorupdate to update the Unit count
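All three are plain Azure Resource Manager REST requests. A minimal Bash sketch of how their URLs might be built follows; the `api-version` values, the `ConnectionCount` metric name and the `Maximum` aggregation are my assumptions rather than values taken from the Logic App, and the function names are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical URL builders for the three ARM REST endpoints the Logic App uses.
# The API versions and the metric name are assumptions; adjust them to your setup.

ARM="https://management.azure.com"
SIGNALR_API_VERSION="2018-10-01"
METRICS_API_VERSION="2018-01-01"

# Resource ID of a SignalR Service instance: subscription, resource group, name.
signalr_id() {
  echo "/subscriptions/$1/resourceGroups/$2/providers/Microsoft.SignalRService/signalR/$3"
}

# GET signalr/get and PUT signalr/createorupdate share the same URL.
signalr_url() {
  echo "$ARM$(signalr_id "$1" "$2" "$3")?api-version=$SIGNALR_API_VERSION"
}

# GET monitor/metrics/list: maximum ConnectionCount over an ISO 8601 timespan ($4).
metrics_url() {
  echo "$ARM$(signalr_id "$1" "$2" "$3")/providers/microsoft.insights/metrics?api-version=$METRICS_API_VERSION&metricnames=ConnectionCount&aggregation=Maximum&timespan=$4"
}
```

With these, something like `az rest --method get --url "$(signalr_url "$SUBSCRIPTION_ID" myResourceGroup mySignalR)" --query sku.capacity` should return the current Unit count, where `SUBSCRIPTION_ID`, `myResourceGroup` and `mySignalR` are placeholders.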

The rest of the Logic App consists of steps that determine whether we need to make the PUT call above and, if so, which Unit count to set.

How many connections until we scale?

The formula I’m using to determine the number of units we need is as follows:

(CurrentConnections + BaseConnections + Buffer) / ConnectionsPerUnit

where:

- CurrentConnections is the maximum number of connections at any time since the last run

- BaseConnections reflects that we’ll always need at least 1 unit regardless of connection count

- Buffer is how close to the maximum connections per unit we’ll get before scaling. I set this to 100 initially so that, for example, reaching 900 connections would add another unit. Testing later showed that the limits are soft and counts would go up to 10% over, so this could probably be closer to 0, or just 0.

- ConnectionsPerUnit = 1000
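The formula can be evaluated with Bash integer arithmetic, which simply discards the fractional part. This sketch assumes BaseConnections equals ConnectionsPerUnit (1,000), so that zero connections still yields 1 Unit and, with a Buffer of 100, reaching 900 connections yields 2; the `ideal_units` function name is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper implementing
#   (CurrentConnections + BaseConnections + Buffer) / ConnectionsPerUnit
# Assumes BaseConnections = ConnectionsPerUnit = 1000 and Buffer = 100.
# Bash's $(( )) division truncates, which is a floor for non-negative values.

BASE_CONNECTIONS=1000
BUFFER=100
CONNECTIONS_PER_UNIT=1000

ideal_units() {
  local current_connections=$1
  echo $(( (current_connections + BASE_CONNECTIONS + BUFFER) / CONNECTIONS_PER_UNIT ))
}
```

Under these assumptions `ideal_units 0` and `ideal_units 899` both give 1, `ideal_units 900` gives 2, and `ideal_units 2500` gives 3.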

The formula produces an ideal Unit count; however, Azure SignalR Service only allows Unit counts of 1, 2, 5, 10, 20, 50 or 100, so we’ll choose the smallest allowed count that’s greater than or equal to our ideal count. As a side note, I also found during testing that 0 is a valid Unit count, but setting it blocks both clients and servers from connecting to the service.
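Picking the allowed Unit count is a walk up the list until we reach a value at least as large as the ideal count. A minimal Bash sketch, where the `allowed_units` function name is hypothetical and ideal counts above 100 are capped at the service maximum (my assumption, since 100 is the largest Unit count on offer):

```bash
#!/usr/bin/env bash
# Hypothetical helper: round an ideal Unit count up to the smallest Unit count
# Azure SignalR Service accepts, capping at the maximum of 100.

ALLOWED_UNITS=(1 2 5 10 20 50 100)

allowed_units() {
  local ideal=$1 units
  for units in "${ALLOWED_UNITS[@]}"; do
    if [ "$units" -ge "$ideal" ]; then
      echo "$units"
      return 0
    fi
  done
  # The ideal count exceeds what the service offers; use the largest allowed value.
  echo "${ALLOWED_UNITS[${#ALLOWED_UNITS[@]}-1]}"
}
```

So an ideal count of 3 becomes 5, 11 becomes 20, and anything over 100 stays at 100.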

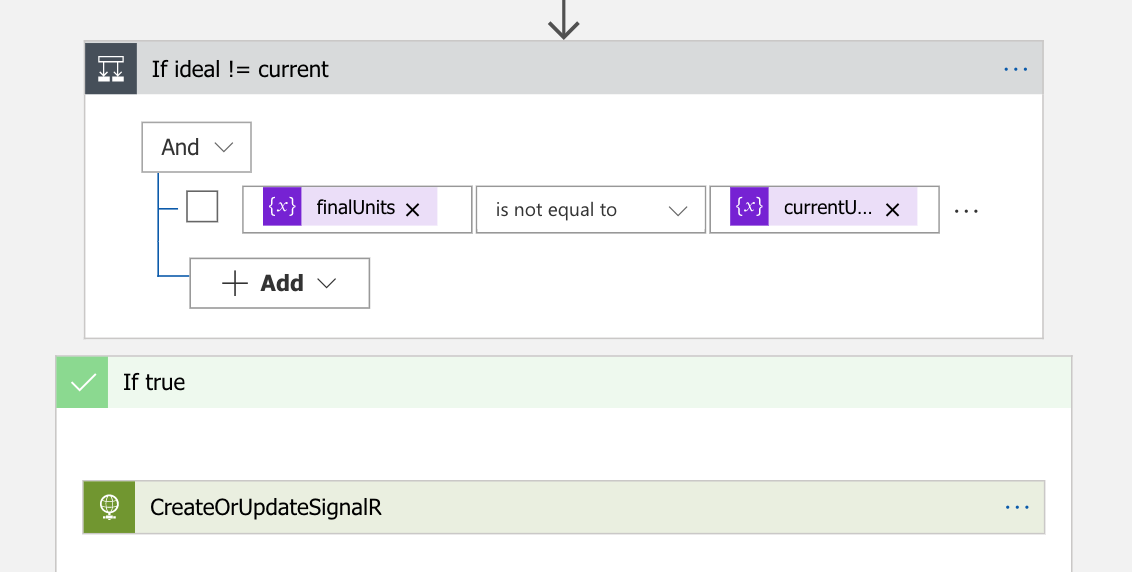

Scaling up and down

Once we’ve determined what unit count we need, we’ll compare it to what the current SignalR Service unit count is. If they are equal, then there’s nothing more to do. If they’re different, we’ll call the createorupdate endpoint with our new unit count, at which time the service will reload, disconnecting all current connections with the following HubException:
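The compare-then-update step might look like this in Bash. It’s a sketch rather than the Logic App’s implementation: it swaps the REST PUT for the Azure CLI’s `az signalr update --unit-count`, the `SIGNALR_NAME`, `RESOURCE_GROUP` and `DRY_RUN` environment variables are hypothetical, and by default it only prints what it would do.

```bash
#!/usr/bin/env bash
# Hypothetical sketch of the scale step: only touch the service when the
# desired Unit count differs, because every change reloads the service and
# disconnects all current connections.
# SIGNALR_NAME and RESOURCE_GROUP are assumed to be set by the caller;
# set DRY_RUN=0 to actually call the Azure CLI.

scale_if_needed() {
  local current=$1 desired=$2
  if [ "$current" -eq "$desired" ]; then
    echo "Unit count already $current; nothing to do"
    return 0
  fi
  echo "Scaling from $current to $desired units"
  if [ "${DRY_RUN:-1}" = "0" ]; then
    az signalr update \
      --name "$SIGNALR_NAME" \
      --resource-group "$RESOURCE_GROUP" \
      --sku Standard_S1 \
      --unit-count "$desired"
  fi
}
```

For example, `scale_if_needed 2 5` prints `Scaling from 2 to 5 units` and, only when `DRY_RUN=0`, issues the update.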

Connection terminated with error: Microsoft.AspNetCore.SignalR.HubException: The server closed the connection with the following error: ServiceReload

Whilst disconnection sounds alarming, it happens often enough in practice that client applications should already have reconnection logic built in.

Parameterising for ARM template deployment

Editing Logic Apps in the Designer is frustrating, to say the least. Converting variables first into Workflow Definition Language parameters and then into ARM template parameters is time-consuming, but it lets us pass parameters and update the Logic App from the command line. Embedding ARM template parameters directly into Logic App definitions is not a great idea, which is why the variables go through Workflow Definition Language parameters first.

We can then deploy the Logic App via the CLI and have it run and auto-scale every 30 minutes like so:

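```bash
# `az group deployment create`, used when this post was written, is deprecated
# in favour of `az deployment group create`, which takes the same arguments here.
az deployment group create \
  --resource-group yourResourceGroup \
  --template-file template.json \
  --parameters @parameters.json \
  --parameters scaleInterval=30
```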

Would I do it again?

Now that it exists, I can auto-scale SignalR Service pretty effortlessly. However, the lack of granular control over Unit count and the absence of consumption pricing make scaling up costly and scaling down before the end of a given day pointless.

Building this in Logic Apps was a learning experience, but the Designer and Workflow Definition Language (WDL) had some shortcomings that made progress slow and frustrating. These included wacky workarounds for converting floats to integers, since WDL has no floor or round functions. I found myself constantly fighting the Designer when it failed to display dynamic variables, inserted superfluous foreach steps, blocked renaming of steps once another step referenced them, and offered no way to edit parameters. These problems meant that, more often than not, I needed to edit the underlying JSON directly.

It’d be interesting to see how the same functionality could be achieved using the Az PowerShell modules inside an Azure Function. Update 16/07: Matt Brailsford has done exactly that, and has posted what I agree is a much cleaner and more maintainable approach using Azure PowerShell.

stafford williams
