Continuous Deployment to Kubernetes - Part 2

In an earlier post I described continuous deployment to Kubernetes using Microsoft Tye to abstract most of the heavy lifting. In this post I’ll describe a way of splitting application code from Infrastructure as Code (IaC) across repositories whilst maintaining continuous deployment.

This post is part of a series on Continuous Deployment to Kubernetes:

- Part 1 - Deploying using Azure Pipelines and Microsoft Tye

- Part 2 - Deploying using Github Actions and Kubernetes Manifests

- Part 3 - Deploying using GitOps (Coming soon)

Architecture

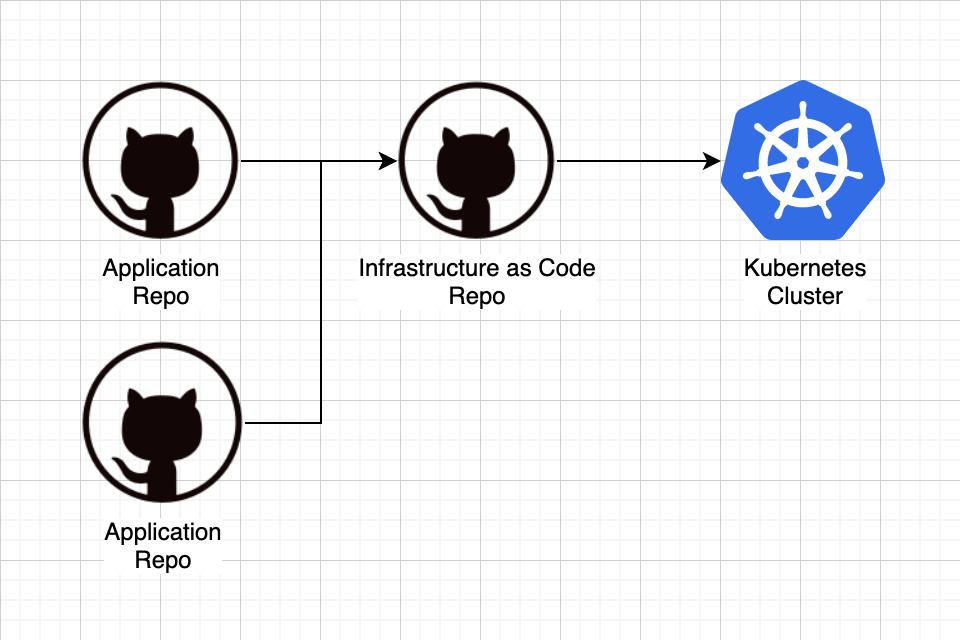

I have multiple git repositories containing the source code of the applications I want to run in my cluster, and another git repository containing the Kubernetes resource manifests that describe which applications run in my cluster, and how. When a commit is merged into an application repository’s main branch, the resulting build artifacts should be made available to my IaC repository, which then deploys them to the cluster. I will use GitHub Actions to build Docker images, push them to a registry, and deploy them to my Kubernetes cluster.

Application Workflow

I’ll again use Digital Icebreakers as an example, and you can see the full workflow here. This workflow triggers only on a push to the main branch, which, because of the configured branch protection, can only happen via a pull request. The first steps are similar to the previous approach: restoring dependencies and testing the application. Then…

```yaml
jobs:
  deploy:
    ...
    steps:
      ...
      - name: Login to Docker Hub
        uses: docker/login-action@v1
        with:
          username: ${{ secrets.DOCKERHUB_USERNAME }}
          password: ${{ secrets.DOCKERHUB_TOKEN }}
      - name: push container
        run: dotnet tye push -v Debug
```

…we authenticate with the container registry (in this case Docker Hub) and again use Microsoft Tye to do several things for us in a single step. dotnet tye push will:

- Restore any missing NuGet packages

- Build the csproj

- Create a Dockerfile

- Build a Docker image

- Tag the image via GitVersion

- Push the image to Docker Hub
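dotnet tye push has to know which registry to push to, and one place Tye reads this from is the registry setting in tye.yaml. A minimal sketch targeting Docker Hub might look like the following; the service name and project path are assumptions for illustration, not taken from the Digital Icebreakers repository.

```yaml
# tye.yaml (hypothetical sketch; service name and project path are assumptions)
name: digitalicebreakers
# For Docker Hub the registry is the account name, so the image is
# pushed as staff0rd/digitalicebreakers:<version>
registry: staff0rd
services:
  - name: digitalicebreakers
    # hypothetical path to the web project
    project: DigitalIcebreakers/DigitalIcebreakers.csproj
```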

Triggering The IaC Repo

Once the container registry has our tagged image, we need to let the IaC repository know that a new image is ready for deployment. GitHub’s repository_dispatch is an event that can trigger workflows in a repository via the GitHub API, and it can carry arbitrary values in a client_payload object. In the steps below we retrieve the current version with nbgv (Nerdbank.GitVersioning), replacing the + with - because Docker image tags cannot contain a +, and send it with an event_type of digital-icebreakers as an API request to the IaC repository, in this case the private repository staff0rd/deploy.

```yaml
jobs:
  deploy:
    ...
    steps:
      ...
      - name: get version
        run: |
          echo "semantic_version=$(dotnet nbgv get-version -v AssemblyInformationalVersion | tr + -)" >> $GITHUB_ENV
      - uses: octokit/request-action@v2.x
        with:
          route: POST /repos/{owner}/{repo}/dispatches
          owner: staff0rd
          repo: deploy
          event_type: digital-icebreakers
          client_payload: |
            semantic_version: ${{ env.semantic_version }}
        env:
          GITHUB_TOKEN: ${{ secrets.DEPLOY_REPO_TOKEN }}
```
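The octokit/request-action step wraps a single call to GitHub’s REST API. As a sketch of what is actually sent, an equivalent step using curl posts the event_type and client_payload as a JSON body to the same dispatches endpoint. This is an alternative shown for illustration, not what the Digital Icebreakers workflow uses.

```yaml
jobs:
  deploy:
    ...
    steps:
      ...
      # Alternative to the octokit/request-action step (sketch only)
      - name: trigger deployment
        env:
          GITHUB_TOKEN: ${{ secrets.DEPLOY_REPO_TOKEN }}
        run: |
          # semantic_version was exported to the environment by the "get version" step
          curl --fail --silent --show-error -X POST \
            -H "Accept: application/vnd.github+json" \
            -H "Authorization: Bearer $GITHUB_TOKEN" \
            https://api.github.com/repos/staff0rd/deploy/dispatches \
            -d "{\"event_type\": \"digital-icebreakers\", \"client_payload\": {\"semantic_version\": \"$semantic_version\"}}"
```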

IaC Workflow

In the IaC repository we have Service and Deployment manifests similar to what Tye would have generated with its deploy command. They may also reference things the application repository knows nothing about, such as secrets and other environment variables.
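The manifests themselves aren’t shown in this post. As a sketch only, a Deployment and Service for Digital Icebreakers might look like the following; the labels, container port, environment variable name, and secret name and key are all assumptions. The container image is referenced by name only, because the tag to deploy arrives later in the dispatch payload.

```yaml
# k8s/digitalicebreakers/deployment.yml (sketch; labels, port and secret are assumptions)
apiVersion: apps/v1
kind: Deployment
metadata:
  name: digitalicebreakers
spec:
  replicas: 1
  selector:
    matchLabels:
      app: digitalicebreakers
  template:
    metadata:
      labels:
        app: digitalicebreakers
    spec:
      containers:
        - name: digitalicebreakers
          # Untagged; the tagged image is substituted at deploy time
          image: staff0rd/digitalicebreakers
          ports:
            - containerPort: 80
          env:
            # Hypothetical secret the application repository knows nothing about
            - name: ConnectionStrings__DefaultConnection
              valueFrom:
                secretKeyRef:
                  name: digitalicebreakers-secrets
                  key: connection-string
---
# k8s/digitalicebreakers/service.yml (sketch)
apiVersion: v1
kind: Service
metadata:
  name: digitalicebreakers
spec:
  selector:
    app: digitalicebreakers
  ports:
    - port: 80
      targetPort: 80
```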

Each of our jobs represents the deployment of a single application. The repository_dispatch event we raised included an event_type, which the IaC workflow receives as the variable github.event.action. We use this variable to decide which job runs, via the condition if: github.event.action == 'digital-icebreakers'.

Each job then has three steps:

- Checkout the IaC source

- Authenticate with Kubernetes

- Apply the particular manifests

```yaml
name: deployments
on: repository_dispatch
jobs:
  deploy-digital-icebreakers:
    name: Deploy Digital Icebreakers
    if: github.event.action == 'digital-icebreakers'
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v1
      - uses: azure/k8s-set-context@v1
        with:
          method: kubeconfig
          kubeconfig: ${{ secrets.KUBECONFIG }}
      - uses: Azure/k8s-deploy@v1.3
        with:
          manifests: |
            k8s/digitalicebreakers/deployment.yml
            k8s/digitalicebreakers/service.yml
          images: "staff0rd/digitalicebreakers:${{ github.event.client_payload.semantic_version }}"
          kubectl-version: "latest"
```

We specify an image tagged with the version we received in the client_payload. The Azure/k8s-deploy action replaces references to the staff0rd/digitalicebreakers image in the Deployment resource with the fully qualified image name given in the step’s images property.
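Because each job is guarded by its own event_type, deploying a further application means adding another job alongside the first. Here is a sketch for a hypothetical second application, another-app, whose repository would raise a repository_dispatch event with an event_type of another-app; the job name, manifest paths and image name are all assumptions.

```yaml
jobs:
  deploy-digital-icebreakers:
    ...
  # Hypothetical second application; runs only for event_type 'another-app'
  deploy-another-app:
    name: Deploy Another App
    if: github.event.action == 'another-app'
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v1
      - uses: azure/k8s-set-context@v1
        with:
          method: kubeconfig
          kubeconfig: ${{ secrets.KUBECONFIG }}
      - uses: Azure/k8s-deploy@v1.3
        with:
          manifests: |
            k8s/another-app/deployment.yml
            k8s/another-app/service.yml
          images: "staff0rd/another-app:${{ github.event.client_payload.semantic_version }}"
          kubectl-version: "latest"
```

Jobs whose if condition is false are reported as skipped rather than failed, so each dispatch only runs the job for its own application.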

Manually Triggering Re-Deployment

The IaC repository’s workflow is triggered by the application repository’s workflow, which, importantly, communicates the image tag we expect to be deployed. This means we can’t manually trigger a deployment in the IaC repository using something like workflow_dispatch, as the workflow wouldn’t know which tag to deploy. To resolve this, I’ve added a workflow_dispatch-triggered workflow to the application code repository.

As seen below, this workflow is similar to the one triggered on merge to the main branch, but it doesn’t build anything; it only performs the steps needed to determine the version so it can raise the repository_dispatch event.

```yaml
name: Deploy existing
on:
  workflow_dispatch:
jobs:
  deploy:
    name: Deploy
    runs-on: ubuntu-latest
    steps:
      - name: Checkout git repository
        uses: actions/checkout@v2
        with:
          fetch-depth: 0 # avoid shallow clone so nbgv can do its work.
      - name: Setup .NET Core
        uses: actions/setup-dotnet@v1
        with:
          dotnet-version: 3.1.301
      - name: Install dotnet tools
        run: dotnet tool restore
      - name: get version
        run: |
          echo "semantic_version=$(dotnet nbgv get-version -v AssemblyInformationalVersion | tr + -)" >> $GITHUB_ENV
      - uses: octokit/request-action@v2.x
        with:
          route: POST /repos/{owner}/{repo}/dispatches
          owner: staff0rd
          repo: deploy
          event_type: digital-icebreakers
          client_payload: |
            semantic_version: ${{ env.semantic_version }}
        env:
          GITHUB_TOKEN: ${{ secrets.DEPLOY_REPO_TOKEN }}
```

Conclusion

Using this approach we can store our Kubernetes manifests in a separate repository from our application code. When an application successfully builds and pushes a Docker image, it triggers our IaC repository to deploy that image to our cluster. We still use Microsoft Tye to do a chunk of the work, but stop short of using it to deploy to Kubernetes. Keeping the manifests in source means we take on the responsibility of managing and configuring them ourselves, but in return we get greater control over how our applications are deployed and scaled in Kubernetes.

stafford williams
