Extending Serverless Framework for Alibaba Cloud

With a continuing interest in Serverless Framework, I decided to leverage its provider-agnostic approach to explore building and deploying an application to Alibaba Cloud.

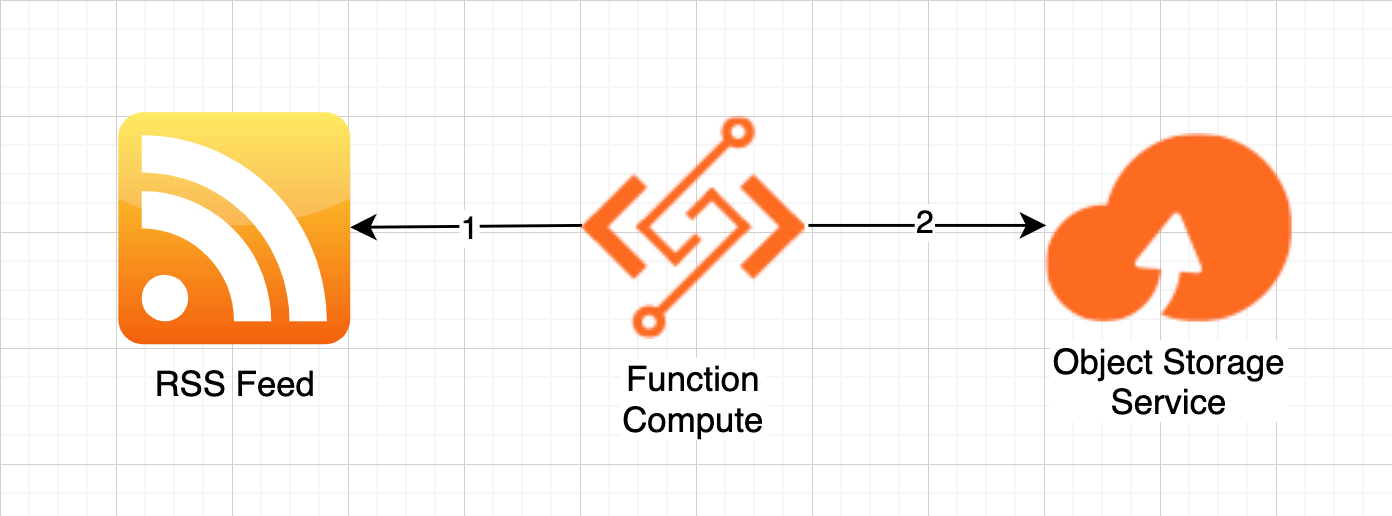

Architecture

The Alibaba Cloud version of the application will use Function Compute to call an RSS feed every hour, parse the results, and persist the output in Object Storage Service (OSS). The source for this application is here.

Configuring the Cloud Environment

If you haven’t already, you’ll need to activate the following products on your Alibaba Cloud account:

Create a new user in RAM with the following auth policies:

- AliyunOSSFullAccess

- AliyunRAMFullAccess

- AliyunLogFullAccess

- AliyunFCFullAccess

Note: Ālǐyún is pinyin for Alibaba Cloud.

Put the user’s access key ID and access key secret, along with your account ID, in a file named ~/.aliyuncli/credentials. It’ll look something like this:

ini

[default]

aliyun_access_key_secret = <access-key-secret>

aliyun_access_key_id = <access-key-id>

aliyun_account_id = <account-id>

Configuring Serverless Framework

I prefer to use TypeScript; however, Serverless Framework’s TypeScript template, aws-nodejs-typescript, creates a serverless.ts, which is only compatible with provider: aws. I’ll create the project using the template, then delete serverless.ts and create a serverless.yml.

Alibaba Cloud functionality is added to Serverless Framework via a plugin, which we can install by running:

bash

serverless plugin install --name serverless-aliyun-function-compute

The most basic config for both the webpack and aliyun plugins is as follows. package.artifact is included as a workaround for a known conflict between the plugins.

yaml

service: headlines-aliyun
frameworkVersion: "2"
custom:
  webpack:
    webpackConfig: "./webpack.config.js"
    includeModules: true
plugins:
  - serverless-webpack
  - serverless-aliyun-function-compute
package:
  artifact: ./serverless/headlines-aliyun.zip
provider:
  name: aliyun
  runtime: nodejs12
  region: ap-southeast-2
  credentials: ~/.aliyuncli/credentials

By default the plugin will attempt to deploy to the region cn-shanghai; however, regions in mainland China require real-name registration. To avoid this, we’ll deploy to Sydney (ap-southeast-2).

At this point the template can be built and inspected with:

bash

serverless package

Or built and deployed with:

bash

serverless deploy

Implementing the Function

Functions can be added to serverless.yml much as we would with the aws provider:

yaml

functions:
  parseFeed:
    handler: src/parseFeed.handle

A first attempt that just reads from the RSS feed could look like this:

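typescript

// Parser is assumed to be the default export of the rss-parser package
import Parser from "rss-parser";

export const handle = async (
  event,
  context,
  callback: (err: any, data: any) => void
) => {
  const feed = await new Parser().parseURL(
    "http://feeds.bbci.co.uk/news/world/rss.xml"
  );
  // signal success: no error, and a summary string as the returned data
  callback(null, `Retrieved ${feed.items.length} items from ${feed.title}`);
};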

In Function Compute, the third parameter received by our function when it is triggered is the callback (see Parameter callback).

The first parameter of callback is the error object, which if populated will indicate the function failed.

The second parameter consists of the data that is returned in the case of no error.

The callback must be called at the end of the function; otherwise Function Compute will not know that the function has finished executing, and the execution will time out in an error state at the default 30-second mark.
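As a hedged sketch of the callback contract described above, the helper below wraps an async function so that the callback is always invoked once, whether the work succeeds or throws. The name withCallback and the placeholder work function are hypothetical and are not part of Function Compute or the plugin.

```typescript
// Node-style callback that Function Compute passes as the third argument.
type Callback = (err: unknown, data?: unknown) => void;

// Hypothetical helper: guarantees the callback is invoked exactly once,
// on both the success path and the failure path.
function withCallback<E, C>(
  work: (event: E, context: C) => Promise<unknown>
) {
  return async (event: E, context: C, callback: Callback): Promise<void> => {
    try {
      const result = await work(event, context);
      callback(null, result); // success: no error, data returned
    } catch (err) {
      callback(err); // failure: a populated error marks the invocation as failed
    }
  };
}

// Usage: the wrapped function only returns or throws. In a real handler
// module this constant would be exported as `handle`.
const handle = withCallback(async () => {
  return "Retrieved 0 items from example feed"; // placeholder for real work
});
```

With a wrapper like this, an exception in the feed request surfaces as a failed invocation straight away instead of as a timeout.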

Extending the Plugin

At the time of writing, the only events that serverless-aliyun-function-compute supports are API Gateway (http) and Object Storage Service (oss).

Resource Orchestration Service (ROS) - Alibaba Cloud’s resource templating system - defines other triggers in the docs for ALIYUN::FC::Trigger. Notably we are interested in TriggerType: timer.

The source for serverless-aliyun-function-compute is pretty easy to follow, so I’ve raised this PR to add the support we need. The change allows us to specify a timer event in our function declaration, which will be converted to a ROS template when we package or deploy.

yaml

functions:
  parseFeed:
    events:
      - timer:
          triggerConfig:
            cronExpression: "0 0 * * * *" # every hour
            enabled: true
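To make the conversion more concrete, here is a rough sketch of how a timer event like the one above might map onto a ROS resource of type ALIYUN::FC::Trigger. It is not the plugin’s actual code: the function name timerEventToRosTrigger, the service name, and the trigger name are all hypothetical, and the triggerConfig is passed through unchanged.

```typescript
// Shape of the timer event as written in serverless.yml.
interface TimerEvent {
  triggerConfig: { cronExpression: string; enabled?: boolean };
}

// Hypothetical mapping: builds one ALIYUN::FC::Trigger resource for a
// timer event. Naming conventions here are assumptions for illustration.
function timerEventToRosTrigger(
  serviceName: string,
  functionName: string,
  triggerName: string,
  event: TimerEvent
) {
  return {
    Type: "ALIYUN::FC::Trigger",
    Properties: {
      ServiceName: serviceName,
      FunctionName: functionName,
      TriggerName: triggerName,
      TriggerType: "timer",
      TriggerConfig: { ...event.triggerConfig }, // passed through as-is
    },
  };
}

const resource = timerEventToRosTrigger(
  "headlines-aliyun-dev", // hypothetical service name
  "parseFeed",
  "parseFeed-timer", // hypothetical trigger name
  { triggerConfig: { cronExpression: "0 0 * * * *", enabled: true } }
);
console.log(JSON.stringify(resource, null, 2));
```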

The cronExpression uses six fields; an online editor for that format can be found here.
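For a quick sanity check without an online editor, a small helper can label the six fields. This is only a sketch: describeCron is a hypothetical name, and the field order (seconds first, then minutes, hours, day of month, month, day of week) is my understanding of the Function Compute timer format, so verify it against the docs.

```typescript
// Assumed field order for Function Compute timer triggers.
const CRON_FIELDS = [
  "seconds",
  "minutes",
  "hours",
  "dayOfMonth",
  "month",
  "dayOfWeek",
] as const;

type CronField = (typeof CRON_FIELDS)[number];

// Hypothetical helper: splits an expression into named fields and rejects
// anything that does not have exactly six of them, such as the five-field
// form used by classic crontab.
function describeCron(expression: string): Record<CronField, string> {
  const parts = expression.trim().split(/\s+/);
  if (parts.length !== CRON_FIELDS.length) {
    throw new Error(
      `Expected ${CRON_FIELDS.length} fields but got ${parts.length}`
    );
  }
  const named = {} as Record<CronField, string>;
  CRON_FIELDS.forEach((field, i) => {
    named[field] = parts[i];
  });
  return named;
}

// "0 0 * * * *" reads as second 0 of minute 0 of every hour.
console.log(describeCron("0 0 * * * *"));
```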

Creating a Bucket

The plugin also does not implement a Resources section, so to persist our data we’ll need another way to create a bucket. We could do it click-ops-style, but instead let’s use the aliyun-cli. I don’t want to install it, though, and it’s written in Go, so Docker to the rescue:

dockerfile

# build aliyun-cli
FROM golang:alpine as builder
RUN apk add --update make git; \
    git clone --recurse-submodules \
    https://github.com/aliyun/aliyun-cli.git \
    /go/src/github.com/aliyun/aliyun-cli;
WORKDIR /go/src/github.com/aliyun/aliyun-cli
RUN make deps; \
    make testdeps; \
    make build;

# create image without build dependencies
FROM alpine
COPY --from=builder /go/src/github.com/aliyun/aliyun-cli/out/aliyun /usr/local/bin
ENTRYPOINT ["aliyun"]

We can build the Dockerfile above with docker build -t aliyun-cli . and then run configure to set up the credentials:

bash

# the key id and secret are the values from ~/.aliyuncli/credentials
docker run -v $PWD/data:/root/.aliyun aliyun-cli configure set \
  --profile default \
  --mode AK \
  --region ap-southeast-2 \
  --access-key-id <ACCESS_KEY_ID> \
  --access-key-secret <ACCESS_KEY_SECRET>

With the credentials set we can use the CLI to manage our infrastructure. oss mb will create a bucket - note that bucket names must be unique across all users.

bash

# create a bucket called 'my-unique-named-bucket'

docker run -v $PWD/data:/root/.aliyun aliyun-cli oss mb \

oss://my-unique-named-bucket

# list all my buckets

docker run -v $PWD/data:/root/.aliyun aliyun-cli oss ls

Integrating the Bucket

A JavaScript client for OSS exists and we’ll install it with:

bash

npm install ali-oss --save

The code to persist our data to the bucket looks like this:

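javascript

const OSS = require("ali-oss");

// the rest runs inside the handler, where `context` is the second argument
const config = {
  region: `oss-${context.region}`,
  accessKeyId: context.credentials.accessKeyId,
  accessKeySecret: context.credentials.accessKeySecret,
  bucket: process.env.BUCKET_NAME,
  stsToken: context.credentials.securityToken,
};
const client = new OSS(config);
// write the pretty-printed JSON to the bucket under the key `fileName`
await client.put(fileName, Buffer.from(JSON.stringify(body, null, 2)));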

The context object is available as the second parameter in the handler’s arguments. The region for OSS needs to be determined from the OSS Region mappings. If you attempt to use the normal, non-OSS region, you’ll get an unhelpful error that looks similar to this:

2021-09-27T13:32:57.449Z f407f500-6bc7-4aba-b65d-72658f911f72 [error] Failed to put Error [RequestError]: getaddrinfo ENOTFOUND my-unique-named-bucket.ap-southeast-2.aliyuncs.com, PUT http://my-unique-named-bucket.ap-southeast-2.aliyuncs.com/2021/9/1/1332.json -1 (connected: false, keepalive socket: false, agent status: {"createSocketCount":1,"createSocketErrorCount":0,"closeSocketCount":0,"errorSocketCount":0,"timeoutSocketCount":0,"requestCount":0,"freeSockets":{},"sockets":{"my-unique-named-bucket.ap-southeast-2.aliyuncs.com:80:":1},"requests":{}}, socketHandledRequests: 1, socketHandledResponses: 0)
headers: {}
    at requestError (/code/node_modules/ali-oss/lib/client.js:315:13)
    at request (/code/node_modules/ali-oss/lib/client.js:207:22)
    at processTicksAndRejections (internal/process/task_queues.js:97:5) {
  name: 'RequestError',
  status: -1,
  code: 'RequestError'
}
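The failing hostname in that log lacks the oss- prefix. As a minimal sketch, the helpers below derive the OSS region from a general region ID and show the host the request would be expected to target. Both toOssRegion and bucketHost are hypothetical names, and the prefix rule is an assumption based on the config above, so check the OSS Region mappings for your region.

```typescript
// Hypothetical helper: adds the "oss-" prefix unless it is already present.
function toOssRegion(region: string): string {
  return region.startsWith("oss-") ? region : `oss-${region}`;
}

// Hypothetical helper: the public hostname a bucket request resolves to,
// assuming the <bucket>.<oss-region>.aliyuncs.com pattern seen in the log.
function bucketHost(bucket: string, region: string): string {
  return `${bucket}.${toOssRegion(region)}.aliyuncs.com`;
}

console.log(bucketHost("my-unique-named-bucket", "ap-southeast-2"));
```

Logging the computed host before calling put can make an ENOTFOUND like the one above much quicker to diagnose.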

I’ve passed the bucket name through the BUCKET_NAME environment variable; however, the plugin doesn’t currently support this either. Since ROS does support it, I’ve added it via another PR. With this change we can specify environment variables to make available to our deployed function:

yaml

functions:
  parseFeed:
    environmentVariables:
      BUCKET_NAME: "${self:custom.bucketName}"

To write to the bucket, the function’s execution role needs a policy that allows it access.

The plugin’s current implementation means the policy will not be updated after its creation on the first deploy.

To update it after the first sls deploy, we’d need to either edit it manually or run sls remove and then sls deploy again.

yaml

provider:
  ramRoleStatements:
    - Effect: Allow
      Action:
        - oss:PutObject
      Resource:
        - "acs:oss:*:*:${self:custom.bucketName}/*"

Conclusion

With the above, our application, once deployed with sls deploy, triggers once an hour and persists the RSS feed results. We had to make two changes to the plugin code: one to support the timer event and one to pass environment variables through to the function. It is likely possible to implement a Resources section that accepts ROS templates and manages the CRUD for those resources during sls deploy, but instead we used aliyun-cli to create the bucket.

ROS appears to have AWS-stack-esque functionality; however, the plugin doesn’t use stacks, meaning operations like renaming functions in serverless.yml will not result in those functions being dropped on the next sls deploy, but will result in new functions being created. With only two events implemented, the serverless-aliyun-function-compute plugin feels more like a proof-of-concept than something worth considering for production.